Understanding ChatGPT

A summary of ChatGPT and GPT techniques

OpenAI is back in the headlines with news that it is updating its viral ChatGPT with a new version called GPT-4. If ChatGPT is the car, then GPT-4 is the engine: a powerful general-purpose technology that can be adapted to a number of different uses. GPT-3 and now GPT-4 are among the internet’s best-known language-processing AI models.

What is ChatGPT?

ChatGPT is a large language model developed by OpenAI, which is based on the GPT (Generative Pre-trained Transformer) architecture. It is designed to understand natural language, generate human-like responses, and complete a variety of language tasks including language translation, text summarization, question-answering and more. It has been trained on a massive amount of text data from the internet, books, and other sources, allowing it to learn and understand a wide range of topics and provide informative and engaging responses.

What is GPT?

GPT (Generative Pre-trained Transformer) is a type of neural network architecture used for natural language processing (NLP) tasks, such as language modelling, text classification, and machine translation. It was first introduced by OpenAI in 2018 and has been used in a wide variety of applications.

The GPT architecture is based on the Transformer model, which was introduced in 2017 by Vaswani et al.

GPT has been a major breakthrough in natural language processing, and the GPT-3 version has 175 billion parameters. It has been used to achieve state-of-the-art results on a wide variety of language tasks and has been adopted by a variety of industries for language-related applications such as chatbots, text summarization, and language translation. Its ability to pre-train on large amounts of data and adapt to new tasks with few examples makes it a highly versatile and valuable tool for language-related applications.

GPT Models

- GPT-1 is available here with paper “Improving Language Understanding by Generative Pre-Training”.

- GPT-2 is open-source here with paper “Language Models are Unsupervised Multitask Learners”.

- GPT-3 has a published paper.

- There are several open-source GPT alternatives, such as LLaMA by Meta AI, which also has a published paper.

- GPT-4 is not open-source, but its general introduction is here.

The first version of GPT was introduced based on the ideas of transformers (a good website for detailed learning can be found here) and unsupervised pre-training. Its results provide a convincing example that pairing supervised learning methods with unsupervised pre-training works very well. In summary, GPT, as the name suggests, adopts generative pre-training of a language model on a diverse corpus of unlabeled text, followed by discriminative supervised fine-tuning on each specific task.

Generative pre-training

The term generative pre-training refers to the unsupervised pre-training of the generative model. Given an unsupervised corpus of tokens $\mathcal{U}=\{u_1,\dots,u_n\}$, a standard language modelling objective is used to maximize the following likelihood:

\[L_1(\mathcal{U})=\sum_i\log P(u_i\mid u_{i-k},\dots,u_{i-1};\Theta)\]

where $k$ is the size of the context window, and the conditional probability $P$ is modelled using a neural network with parameters $\Theta$ trained using stochastic gradient descent. Intuitively, we train the Transformer-based model to predict the next token within the $k$-context window using unlabeled text, from which we also extract the latent features $h$.
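To make the next-token objective concrete, here is a minimal sketch that sums $\log P(u_i\mid u_{i-k},\dots,u_{i-1})$ over a toy corpus. It assumes a simple count-based model with add-one smoothing standing in for the Transformer; the function names (`train_counts`, `cond_prob`, `l1_objective`) and the toy corpus are illustrative and do not come from the GPT paper.

```python
import math
from collections import Counter, defaultdict


def train_counts(tokens, k):
    """Count how often each token follows each context of up to k previous tokens."""
    counts = defaultdict(Counter)
    for i, tok in enumerate(tokens):
        context = tuple(tokens[max(0, i - k):i])
        counts[context][tok] += 1
    return counts


def cond_prob(counts, context, token, vocab_size, alpha=1.0):
    """Add-alpha smoothed estimate of P(token | context).

    This count table is a stand-in (an assumption) for the neural network
    with parameters Theta described in the text.
    """
    seen = counts.get(context, Counter())
    return (seen[token] + alpha) / (sum(seen.values()) + alpha * vocab_size)


def l1_objective(tokens, k, counts, vocab_size):
    """Sum of log P(u_i | u_{i-k}, ..., u_{i-1}) over the token sequence."""
    total = 0.0
    for i, tok in enumerate(tokens):
        context = tuple(tokens[max(0, i - k):i])
        total += math.log(cond_prob(counts, context, tok, vocab_size))
    return total


corpus = "the cat sat on the mat the cat sat on the rug".split()
k = 2
vocab_size = len(set(corpus))
counts = train_counts(corpus, k)

seen_score = l1_objective(corpus, k, counts, vocab_size)
reversed_score = l1_objective(corpus[::-1], k, counts, vocab_size)
print(seen_score, reversed_score)
```

The text the model was fitted on scores a higher (less negative) log-likelihood than the same tokens in reversed order, which is the quantity that pre-training pushes upward.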

Supervised fine-tuning

After training the model with the objective function above, the parameters are adapted to the supervised target task, a step referred to as supervised fine-tuning. Assume a labelled dataset $\mathcal{C}$, where each instance consists of a sequence of input tokens, $x^1,\dots, x^m$, along with a label $y$. The inputs are passed through the pre-trained model to obtain the final transformer block’s activation $h_l^m$, which is then fed into an added linear output layer with parameters $W_y$ to predict $y$:

\[P(y\mid x^1,\dots,x^m)=\mathrm{softmax}(h_l^mW_y).\]

This gives us the following objective to maximize:

\[L_2(\mathcal{C})=\sum_{(x,y)}\log P(y\mid x^1,\dots,x^m)\]

They additionally found that including language modelling as an auxiliary objective to the fine-tuning helped learning by (a) improving the generalization of the supervised model, and (b) accelerating convergence. Specifically, we optimize the following objective (with weight $\lambda$): $L_3(\mathcal{C})=L_2(\mathcal{C})+\lambda\cdot L_1(\mathcal{C})$.
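The fine-tuning formulas can be traced with a few lines of plain Python. This is a sketch under stated assumptions: `h` stands for the final activation $h_l^m$ as a short list of floats, `W_y` is a small hand-written weight matrix, and the value `lam=0.5` is only an illustrative default for $\lambda$. None of these numbers come from a trained model.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def class_log_prob(h, W_y, y):
    """log P(y | x^1..x^m) = log softmax(h W_y)[y].

    h is a length-d vector; W_y is a d x num_classes matrix (list of rows).
    """
    num_classes = len(W_y[0])
    logits = [sum(h[j] * W_y[j][c] for j in range(len(h)))
              for c in range(num_classes)]
    return math.log(softmax(logits)[y])


def l2_objective(examples, W_y):
    """Sum of log P(y | x) over (h, y) pairs, where h is the final activation."""
    return sum(class_log_prob(h, W_y, y) for h, y in examples)


def l3_objective(l2, l1, lam=0.5):
    """Combined objective L3 = L2 + lambda * L1 (lam is an assumed value)."""
    return l2 + lam * l1


# Toy values, chosen by hand for illustration only.
W_y = [[2.0, 0.0],
       [0.0, 1.0]]
examples = [([1.0, 0.0], 0), ([0.0, 1.0], 1)]

l2 = l2_objective(examples, W_y)
l3 = l3_objective(l2, l1=-4.0, lam=0.5)
print(l2, l3)
```

In a real setup `h` would come from the pre-trained Transformer and $L_1$ would be the language modelling objective evaluated on the fine-tuning text, so both terms are maximized together.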

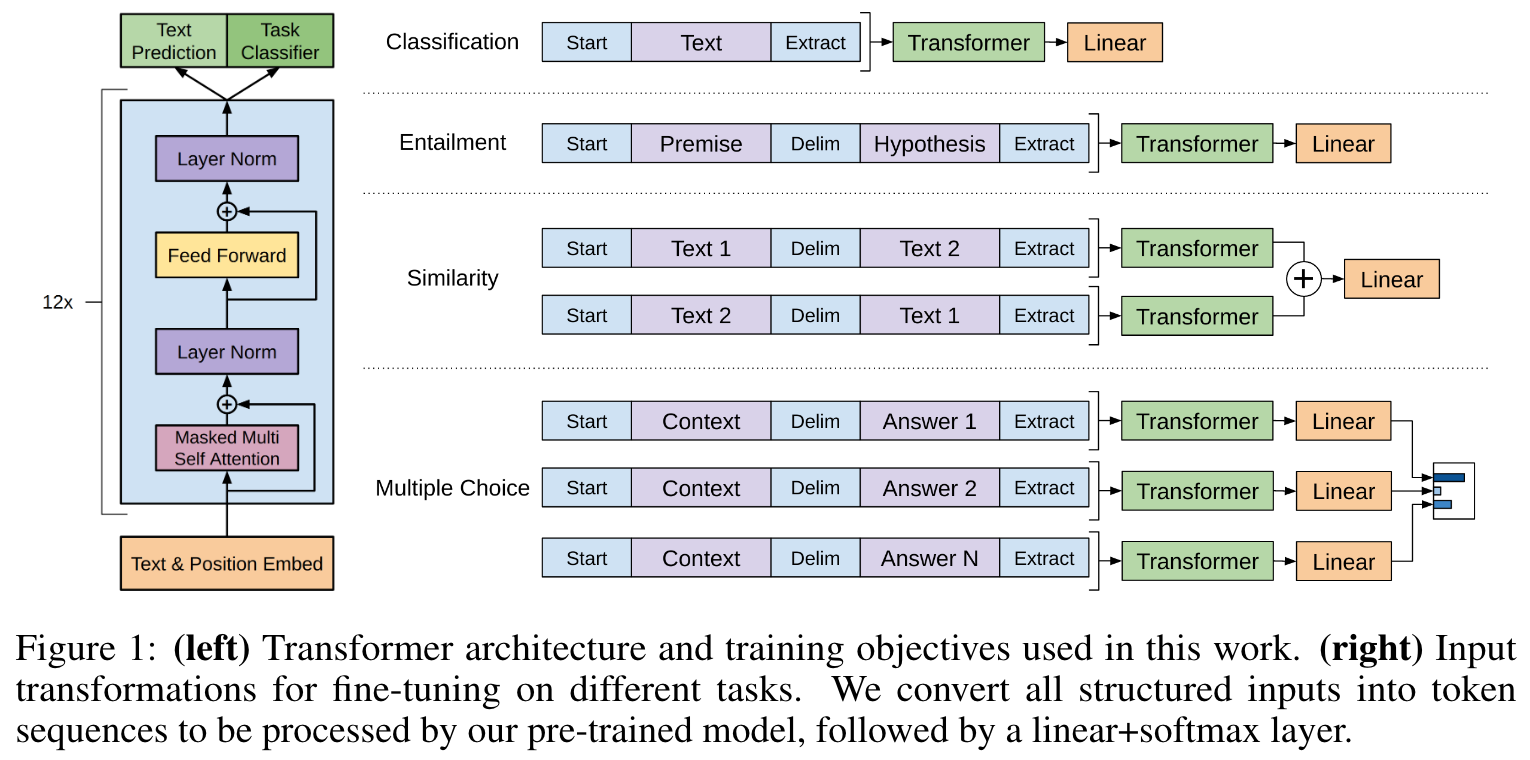

Some tasks, like question answering or textual entailment, have structured inputs such as ordered sentence pairs, or triplets of document, question, and answers. These differ from the contiguous sequences of text that the pre-trained model was trained on, so some modification is required to apply GPT to them. The solution is a set of input transformations that convert structured inputs into ordered token sequences, which avoids making extensive changes to the architecture across tasks. A brief description of these input transformations is shown in Figure 1 (credit to the paper).
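As a rough illustration of these input transformations, the sketch below flattens structured inputs into single token sequences using start, delimiter, and extract tokens. The token strings (`<s>`, `<$>`, `<e>`) and the function names are hypothetical placeholders chosen for this example; the general pattern (one delimited sequence for entailment, both orderings for similarity, one sequence per candidate answer for multiple choice) follows the description in the GPT-1 paper.

```python
# Hypothetical special-token strings; a real tokenizer defines its own.
START, DELIM, EXTRACT = "<s>", "<$>", "<e>"


def entailment_input(premise, hypothesis):
    """Premise and hypothesis joined into one sequence with a delimiter."""
    return [START] + premise + [DELIM] + hypothesis + [EXTRACT]


def similarity_inputs(text_a, text_b):
    """Similarity has no inherent ordering, so both orderings are produced."""
    return [
        [START] + text_a + [DELIM] + text_b + [EXTRACT],
        [START] + text_b + [DELIM] + text_a + [EXTRACT],
    ]


def multiple_choice_inputs(document, question, answers):
    """One sequence per candidate answer: document + question, delimiter, answer."""
    return [
        [START] + document + question + [DELIM] + answer + [EXTRACT]
        for answer in answers
    ]


premise = ["a", "dog", "runs"]
hypothesis = ["an", "animal", "moves"]
print(entailment_input(premise, hypothesis))

choices = multiple_choice_inputs(["it", "rained"], ["what", "fell", "?"],
                                 [["rain"], ["snow"]])
print(choices)
```

Each resulting sequence is processed by the same pre-trained model, and only the small output layer on top depends on the task.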

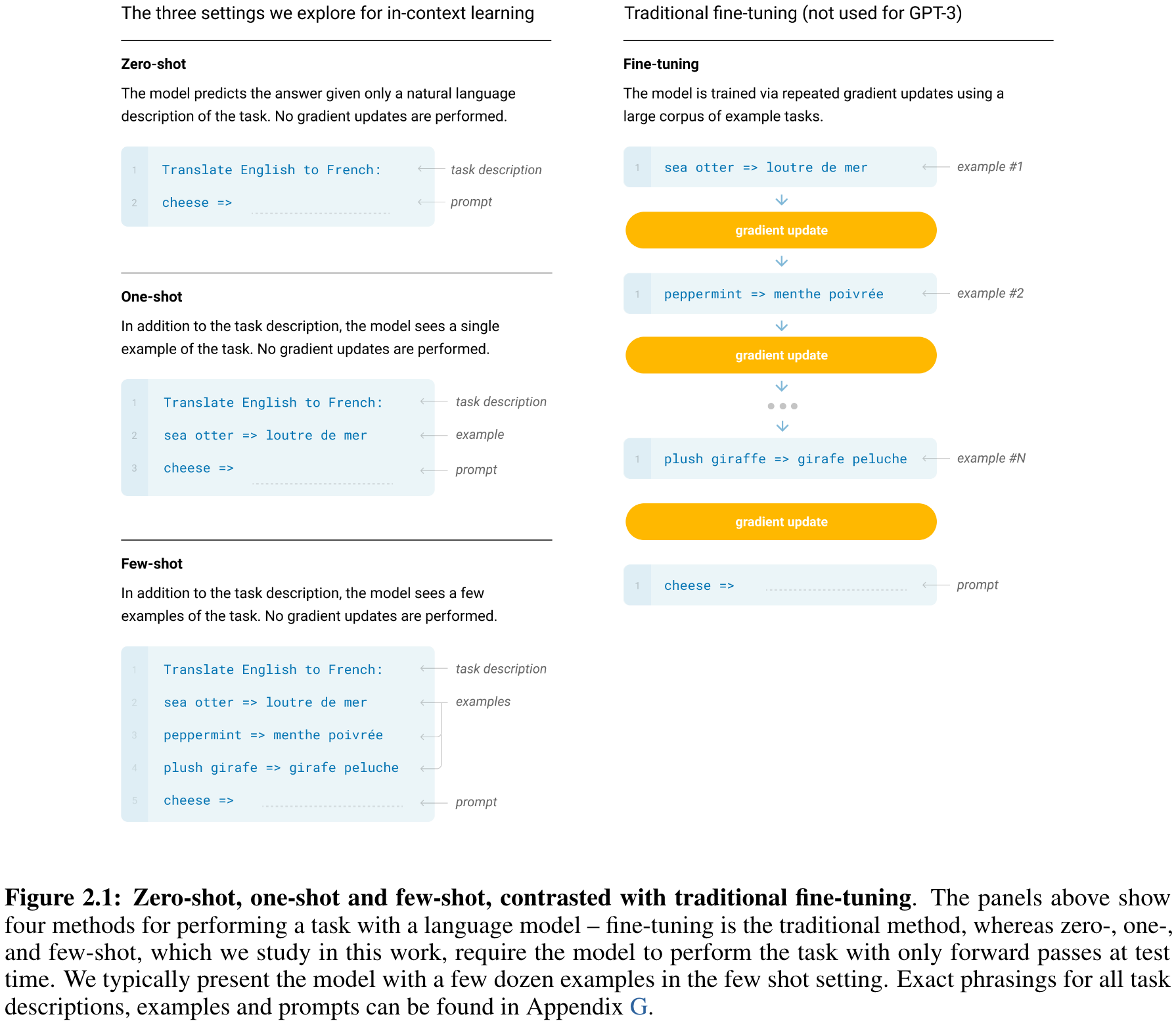

GPT-3 was applied with tasks and few-shot demonstrations specified purely via text interaction with the model, without any fine-tuning. Fine-tuning, by contrast, updates the weights of a pre-trained model by training on a supervised dataset specific to the desired task, and it typically requires thousands to hundreds of thousands of labelled examples. Its main disadvantages are the need for a new large dataset for every task, the potential for poor generalization out-of-distribution, and the potential to exploit spurious features of the training data, potentially resulting in an unfair comparison with human performance.

Few-shot learning

Few-shot is the term for the setting where the model is given a few demonstrations of the task at inference time as conditioning, but no weight updates are allowed. Few-shot learning involves learning based on a broad distribution of tasks (in this case implicit in the pre-training data) and then rapidly adapting to a new task. By comparison, the primary goal in traditional few-shot frameworks is to learn a similarity function that can map the similarities between the classes in the support and query sets.

Figure 2.1

The main advantages of few-shot are a major reduction in the need for task-specific data and a reduced potential to learn an overly narrow distribution from a large but narrow fine-tuning dataset. The main disadvantage is that results from this method have so far been much worse than state-of-the-art fine-tuned models. Also, a small amount of task-specific data is still required.
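In practice, the few-shot setting amounts to writing the demonstrations into the prompt text. The sketch below assembles such a prompt; `build_few_shot_prompt` and the `=>` separator are illustrative choices for this example rather than a fixed format, and no model is called here.

```python
def build_few_shot_prompt(task_description, demonstrations, query):
    """Assemble a prompt from a task description, K demonstrations, and a query.

    The demonstrations only condition the model at inference time;
    no weights are updated.
    """
    lines = [task_description]
    for source, target in demonstrations:
        lines.append(f"{source} => {target}")
    # The final line is left open for the model to complete.
    lines.append(f"{query} =>")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Translate English to French:",
    [("sea otter", "loutre de mer"), ("peppermint", "menthe poivrée")],
    "cheese",
)
print(prompt)
```

Passing an empty list of demonstrations gives the zero-shot version of the same prompt, and a single pair gives the one-shot version.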

Improvements of GPTs

GPT-1 applies unsupervised learning to train language representations on a large amount of unlabeled text, and then uses supervised fine-tuning to improve performance on a wide range of NLP tasks. However, it has drawbacks, including (1) compute requirements (an expensive pre-training step), (2) the limits and bias of learning about the world through text, and (3) still-brittle generalization.

GPT-2 is a larger model with 1.5 billion parameters. It largely follows the design of GPT-1 (117 million parameters), with a few modifications.

GPT-3 uses a variety of techniques to improve performance, including:

- Larger size: has 175 billion parameters, which allows it to capture even more complex language patterns and relationships.

- Sparse attention: alternates dense and locally banded sparse attention patterns in the transformer layers. GPT-3 is otherwise a dense model that uses all of its parameters for every input, so it does not adjust the number of parameters per task.

- Few-shot learning: learns to perform a new task with just a few examples, making it highly flexible and adaptable to new tasks and contexts.

- Prompt engineering: can be given a natural language prompt to generate text that fits a specific context or follows a specific style.
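The parameter counts quoted above (117 million, 1.5 billion, 175 billion) can be roughly reproduced with a common back-of-the-envelope rule: each Transformer block holds about $12\,d_{model}^2$ weights, plus token and position embeddings. The sketch below applies that rule. The layer counts, widths, vocabulary sizes, and context lengths are the configurations as recalled from the published papers and should be treated as assumptions; biases and layer-norm weights are ignored.

```python
def approx_params(n_layers, d_model, vocab_size, n_ctx):
    """Rough parameter count for a decoder-only Transformer.

    Per block: about 4*d^2 for attention (Q, K, V, output projections)
    plus 8*d^2 for the feed-forward layers (d -> 4d -> d).
    Biases and layer-norm parameters are ignored in this estimate.
    """
    blocks = 12 * n_layers * d_model ** 2
    embeddings = (vocab_size + n_ctx) * d_model  # token + position embeddings
    return blocks + embeddings


# Assumed configurations (n_layers, d_model, vocab_size, n_ctx).
configs = {
    "GPT-1": (12, 768, 40478, 512),
    "GPT-2": (48, 1600, 50257, 1024),
    "GPT-3": (96, 12288, 50257, 2048),
}

estimates = {name: approx_params(*cfg) for name, cfg in configs.items()}
for name, count in estimates.items():
    print(f"{name}: about {count / 1e9:.3f} billion parameters")
```

The estimates land within a few percent of the published figures, which shows that most of the growth from GPT-1 to GPT-3 comes from deeper and much wider blocks rather than from the vocabulary.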

Limitations of Generative AI

- Generative AI tools are language machines rather than databases of knowledge – they work by predicting the next plausible word or section of programming code from patterns that have been ‘learnt’ from large data sets;

- AI tools have no understanding of what they generate. A knowledgeable human must check the work (often in iterations);

- The data sets that such tools are learning from are flawed and contain inaccuracies, biases and limitations;

- They generate text that is not always factually correct;

- They can create software/code that has security flaws and bugs, uses illegal libraries or calls, or infringes copyrights;

- Often the code or calculation produced by AI will look plausible but contain errors in detailed working on closer inspection. A human trained in that programming language should fully check any code or calculation produced in this way;

- The data their models are trained on is not up-to-date – they currently have limited or constrained data on the world and events after a certain point (2021 in the case of ChatGPT);

- They can generate offensive content;

- They produce fake citations and references;

- Such systems are amoral - they don’t know that it is wrong to generate offensive, inaccurate or misleading content;

- They include hidden plagiarism – meaning that they make use of words and ideas from human authors without referencing them, which we would consider as plagiarism;

- There are risks of copyright infringements on pictures and other copyrighted material.